clearstream


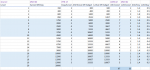

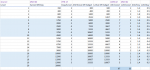

Adventuring day XP (DMG 84) suggests how much XP characters might earn per adventuring day (after adjustment). Using encounter thresholds (DMG 82), one can estimate how many encounters of a given threshold a character might need to go from level 1 to 20, given a few assumptions (see further below). Dividing the XP cost of each level (PHB 15) by the adventuring day XP for that level then suggests the number of adventuring days needed to reach each level. Here is that table (updated) -

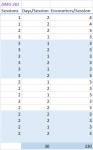

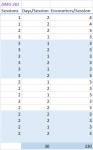
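As a rough illustration of the division described above, here is a minimal sketch for the first few levels only. The XP figures are my transcription of the cited tables (PHB 15, DMG 84) and should be checked against the books; the function and variable names are made up for this example. It also ignores the difference between adjusted and awarded XP, so treat it as an approximation rather than the exact method behind the table.

```python
# Total XP needed to reach each level (transcribed from PHB 15, levels 1-5 only).
XP_TO_REACH = {1: 0, 2: 300, 3: 900, 4: 2700, 5: 6500}

# Adjusted XP per character per adventuring day (transcribed from DMG 84, levels 1-4 only).
DAY_XP = {1: 300, 2: 600, 3: 1200, 4: 1700}


def days_to_next_level(level):
    """Estimated adventuring days to advance from `level` to `level + 1`."""
    cost = XP_TO_REACH[level + 1] - XP_TO_REACH[level]
    return cost / DAY_XP[level]


for level in sorted(DAY_XP):
    print(f"level {level} -> {level + 1}: {days_to_next_level(level):.2f} days")
```

Swapping `DAY_XP` for a per-character encounter threshold would give encounters per level instead of days per level.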

The DMG guidelines for advancement (DMG 261) allow this to be looked at in terms of sessions (updated) -

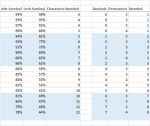

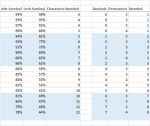

Chance of death in an encounter type can be assessed against the number of encounters that characters are likely to face, showing how many characters a party of four might need to generate in order to get one to 20th level (updated) -

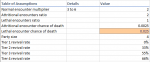

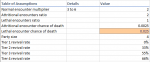
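To show how a small per-encounter chance of death compounds over a career, here is a simple sketch. It is not necessarily the model behind the tables above: it assumes every encounter carries the same independent chance of death, ignores revival magic, and treats each replacement character as starting the whole run of encounters again. The values of `p` and `n` are placeholders, not figures from the DMG.

```python
def survival_chance(p_death, n_encounters):
    """Chance that one character survives n independent encounters."""
    return (1.0 - p_death) ** n_encounters


def expected_characters(p_death, n_encounters):
    """Expected number of characters generated before one survives the full run.

    Assumes each new character faces the same n encounters from scratch,
    so the count follows a geometric distribution with mean 1 / survival.
    """
    return 1.0 / survival_chance(p_death, n_encounters)


p = 0.01  # hypothetical chance of death per encounter
n = 100   # hypothetical number of encounters in a career

print(f"survival chance: {survival_chance(p, n):.3f}")
print(f"expected characters: {expected_characters(p, n):.2f}")
```

Even a 1% chance per encounter leaves only about a 37% chance of surviving 100 encounters, which is why revival magic matters so much in the long run.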

Note the possibly significant role for revival magic. Over a character's entire career a great many encounters will be faced, which magnifies mortality. Here are some background assumptions I made -

For the sake of argument, I called medium and hard encounters "attritional" because they are unlikely to do more than use up resources, and deadly ones "lethal" because they could well result in party deaths. I used the threshold for hard encounters as the XP value for attritional, and the threshold for deadly as the XP value for lethal. In play, of course, most encounters will fall above thresholds rather than exactly on them. My overall purpose was to answer a question: how lethal must an attritional encounter be, compared with a lethal one? To estimate that, a group needs to fix their own assumptions. I'll post this now, and in the following post, below, will discuss some values for lethality and their implications...
